
...

MPI is a parallel programming interface for explicitly passing messages between parallel processes, so your program must already contain message passing calls. To enable your program to use MPI, include the MPI header file in your source (mpi.h for C and C++, mpif.h or the mpi module for Fortran) and link to the MPI libraries when you compile.

Compiling and Linking

The preferred MPI library is Open MPI. To see which versions are available, type

```bash
module avail openmpi
```

Loading the module (module load openmpi for the default version, or module load openmpi/<version> for version <version>) sets a variety of environment variables, which you can see with

```bash
module show openmpi             # for the default version
module show openmpi/<version>   # for version <version>
```

For Fortran, compile with one of the following commands:

```bash
ifort myprog.f -o myprog.exe $OMPI_FLIBS
mpif77 myprog.f -o myprog.exe
mpif90 myprog.f90 -o myprog.exe
```

The environment variable $OMPI_FLIBS has been set up to insert the correct libraries for linking. These are the same libraries that the wrapper commands mpif77 and mpif90 use. Passing the -show option to a wrapper prints the full list of options that the wrapper passes on to the backend compiler (Open MPI's wrappers use --showme for the same purpose). For example, typing mpif77 -show for Intel MPI gives something like ifort -I/<path>/include -L/<path>/lib -Xlinker --enable-new-dtags -Xlinker -rpath -Xlinker /<path>/lib -Xlinker -rpath -Xlinker /<path>/mpi-rt/4.1 -lmpigf -lmpi -lmpigi -ldl -lrt -lpthread. If you are using the Fortran 90 bindings for MPI (unlikely), then you need $OPENMPI_F90LIBS.
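Before compiling, it can help to confirm that the module has actually set the link variables used above. The following is a minimal POSIX shell sketch. show_ompi_env and require_var are hypothetical helper names, not part of the openmpi module. The sketch also assumes that the relevant variables all start with OMPI_, as $OMPI_FLIBS, $OMPI_CLIBS and $OMPI_CXXLIBS do on this page.

```bash
# Hypothetical helpers for build scripts; not provided by the openmpi module.

# List the OMPI_* variables exported by "module load openmpi".
show_ompi_env() {
    env | grep '^OMPI_' | sort
}

# Return non-zero (with a message) if the named variable is unset or empty.
require_var() {
    eval "val=\${$1-}"
    if [ -z "$val" ]; then
        echo "error: \$$1 is not set; load an openmpi module first" >&2
        return 1
    fi
}

# Example: fail early instead of producing a confusing link error.
# require_var OMPI_FLIBS && ifort myprog.f -o myprog.exe $OMPI_FLIBS
```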

For C and C++, compile with one of:

```bash
icc -pthread myprog.c -o myprog.exe $OMPI_CLIBS
mpicc myprog.c -o myprog.exe
icpc -pthread myprog.C -o myprog.exe $OMPI_CXXLIBS
mpiCC myprog.C -o myprog.exe
```

Note that $OMPI_CXXLIBS is only relevant if you are actually using the C++ bindings for MPI. Most C++ MPI applications use the C bindings so linking with $OMPI_CLIBS is sufficient.

As mentioned above, do not use the -fast option. It implies -static, which conflicts with the MPI libraries because they are shared libraries. Instead, use options such as -O3 -ipo, which give most of the optimisation of -fast without -static.

If you do not have an Intel compiler module loaded, the MPI compiler wrappers will use the GNU compilers by default. In that case, the following pairs of commands are equivalent:

```bash
mpif90 myprog.F     # equivalent to: gfortran myprog.F $OMPI_FLIBS
mpicc myprog.c      # equivalent to: gcc -pthread myprog.c $OMPI_CLIBS
mpiCC myprog.C      # equivalent to: g++ -pthread myprog.C $OMPI_CXXLIBS
```

Note that the appropriate include paths are placed in the CPATH and FPATH environment variables when you load the openmpi module.

Running MPI Jobs

To run an MPI application, you need to have an MPI module loaded in your environment. The modules of software packages requiring MPI will generally load the appropriate MPI module for you. MPI programs are executed using the mpirun command. To run a small test with 4 processes (or tasks) where the MPI executable is called a.out, enter any of the following equivalent commands:

```bash
mpirun -n 4 ./a.out
mpirun -np 4 ./a.out
```

The argument to -n or -np is the number of a.out processes that will be run.

For larger jobs and production use, submit a job to the PBS batch system with the qsub <script name> command, using a job script like the following (each PBS directive and command must be on its own line, with a newline after the last command):

```bash
#!/bin/bash
#PBS -q normal
#PBS -l ncpus=32
#PBS -l mem=56GB
#PBS -l walltime=00:20:00
#PBS -l jobfs=400GB
#PBS -l wd

module load openmpi/1.10.2
mpirun -np $PBS_NCPUS ./a.out
```

Alternatively, you can type qsub on its own, enter the same lines at the terminal and finish with ^D (that is, control-D).

If you do not specify the -np option to mpirun in a batch job, mpirun starts as many MPI processes as there are cpus requested with qsub. You can also give the number of processes explicitly on the batch job mpirun command, as in mpirun -np 4 ./a.out, or more generally mpirun -np $PBS_NCPUS ./a.out, as in the script above.

To improve performance on the NUMA nodes of Raijin, the current default version of Open MPI binds MPI processes to both cpus and memory by default. If your application requires a non-standard layout (for example, the ranks are multithreaded or some ranks require very large memory), you may need extra mpirun options to avoid the default binding. In the extreme case, binding can be disabled with

```bash
mpirun --bind-to none -np 4 ./a.out
```

although there may be more appropriate options (see man mpirun). Open MPI versions before 1.7 spelled this option --bind-to-none.
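If the same commands are used both interactively and in a PBS job, a small fallback means you do not have to edit the process count by hand. This is a sketch. mpi_np is a hypothetical helper. It uses $PBS_NCPUS when PBS has set it, and otherwise uses a default that you supply.

```bash
# Hypothetical helper: number of MPI processes to start.
# Inside a PBS job, use the cpus requested with qsub ($PBS_NCPUS);
# otherwise fall back to the first argument, or 1 if none is given.
mpi_np() {
    echo "${PBS_NCPUS:-${1:-1}}"
}

# Example: 4 processes interactively, $PBS_NCPUS processes in a batch job.
# mpirun -np "$(mpi_np 4)" ./a.out
```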

Using OpenMP

OpenMP is an extension to standard Fortran, C and C++ to support shared memory parallel execution. Directives have to be added to your source code to parallelise loops and specify certain properties of variables. (Note that OpenMP and Open MPI are unrelated.)

Compiling and Linking

Fortran with OpenMP directives is compiled as:

```bash
ifort -openmp myprog.f -o myprog.exe             # newer Intel compilers use -qopenmp
gfortran -fopenmp -lgomp myprog.f -o myprog.exe
```

C code with OpenMP directives is compiled as:

```bash
icc -openmp myprog.c -o myprog.exe             # newer Intel compilers use -qopenmp
gcc -fopenmp -lgomp myprog.c -o myprog.exe
```

Running OpenMP Jobs

Use the following script to run the OpenMP job on a Raijin Sandy Bridge node:

```bash
#!/bin/bash
#PBS -q normal
#PBS -l ncpus=16
#PBS -l walltime=00:20:00
#PBS -l mem=28GB
#PBS -l wd

export OMP_NUM_THREADS=$PBS_NCPUS
export GOMP_CPU_AFFINITY=0-15
./myprog.exe
```

OpenMP is a shared memory parallelism model, so only one host (node) can be used to execute an OpenMP application. Raijin's Sandy Bridge nodes have 16 cpu cores each, so it makes no sense to run an OpenMP application with more than 16 threads on these nodes. Note that the example above requests all 16 cpu cores of a node.

You should time your OpenMP code on a single processor, then on increasing numbers of CPUs, to find the optimal number of processors for running it. Keep in mind that your job is charged ncpus*walltime. A run that is only slightly faster on twice as many CPUs therefore costs nearly twice as much.
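A timing sweep like this can be scripted inside a single job that requests all 16 cores, such as the OpenMP job script above. The following is a minimal POSIX shell sketch with a few assumptions:

- omp_scaling is a hypothetical helper.
- Timing uses date +%s, so it is only accurate to about a second. That is adequate for runs of a minute or more.
- The last column estimates the charge (ncpus*walltime) as if each thread count had been run as its own job.

```bash
# Hypothetical helper: run a program once per OpenMP thread count and print
# the thread count, elapsed wall time (s) and threads x seconds.
# Usage: omp_scaling ./myprog.exe 1 2 4 8 16
omp_scaling() {
    prog=$1
    shift
    echo "threads seconds cpu_seconds"
    for n in "$@"; do
        start=$(date +%s)
        # The assignment prefix sets OMP_NUM_THREADS for this run only;
        # program output goes to omp_<n>.log.
        OMP_NUM_THREADS=$n "$prog" > "omp_$n.log" 2>&1
        end=$(date +%s)
        elapsed=$((end - start))
        # Estimated charge if run as a job with ncpus=$n: ncpus * walltime
        echo "$n $elapsed $((n * elapsed))"
    done
}
```

In the OpenMP job script above, you could replace the ./myprog.exe line with omp_scaling ./myprog.exe 1 2 4 8 16.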

OpenMP Performance

Parallel loop overheads

There is an overhead in starting and ending any parallel work distribution construct: an empty parallel loop takes much longer than an empty serial loop, and this overhead grows with the number of threads used. Meanwhile, the time spent doing the real work has (hopefully) decreased by using more threads. So you can end up with timelines like the following for a parallel work distribution region:

```
          4 cpus     8 cpus
 time      ----       ----
  |      startup    startup
  |        ----
  V                   ----
           work       work
           ____       ____
         cleanup    cleanup
           ----       ----
```

Bottom line: the amount of work in a parallel loop (or section) has to be large compared with the startup time. The startup cost is tens of microseconds, which is equivalent to the time for doing thousands of floating point operations. Allow another order of magnitude because you are splitting the work over O(10) threads, and at least another order of magnitude because you want the work to dominate the startup cost. You very quickly need O(million) operations in a parallelised loop for it to scale well.
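This estimate can be written out as a back-of-envelope inequality. It only restates the paragraph above, using the following symbols:

- $N_s$ is the number of floating point operations that take as long as one parallel-region startup (thousands, so roughly $10^3$ to $10^4$).
- $p \approx 10$ is the number of threads.
- The factor of 10 ensures that each thread's share of the work dominates the startup cost.

$$
\frac{N_{\text{ops}}}{p} \gtrsim 10\,N_s
\quad\Longrightarrow\quad
N_{\text{ops}} \gtrsim 10\,p\,N_s \approx 10 \times 10 \times \left(10^{3}\text{ to }10^{4}\right) = 10^{5}\text{ to }10^{6}
$$

The result is the O(million) operations quoted above.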

Common Problems

- One of the most common problems encountered after parallelizing a code is the generation of floating point exceptions or segmentation violations that were not occurring before. This is usually due to uninitialised variables – check your code very carefully.

Segmentation violations can also be caused by the thread stack size being too small. Change this by setting the environment variable OMP_STACKSIZE, for example,

```bash
export OMP_STACKSIZE="10M"
```
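OMP_STACKSIZE applies only to the threads that the OpenMP runtime creates. The initial (master) thread runs on the process stack, which is limited by the shell's stack size limit (ulimit -s). A job script can therefore raise both before starting the program. This is a sketch. set_omp_stacks is a hypothetical helper, and 10M is just the example value from above.

```bash
# Hypothetical helper: enlarge OpenMP thread stacks before running a program.
# $1 = stack size for threads created by the OpenMP runtime (e.g. 10M).
set_omp_stacks() {
    OMP_STACKSIZE=$1
    export OMP_STACKSIZE
    # The initial thread uses the process stack: raise the soft limit
    # to the hard limit (ulimit -s reports kilobytes or "unlimited").
    ulimit -s "$(ulimit -H -s)"
}

# Example in a job script:
# set_omp_stacks 10M
# ./myprog.exe
```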

Using MPI and OpenMP (Hybrid MPI+OpenMP)

Use the following script if you want to launch 1 MPI process per Raijin Sandy Bridge node, binding 16 cores per MPI process (binding to node) and running 16 OMP threads per MPI process:

```bash
#!/bin/bash
#PBS -q normal
#PBS -l ncpus=32
#PBS -l walltime=00:20:00
#PBS -l mem=56GB
#PBS -l wd

module load openmpi/1.10.2
export OMP_NUM_THREADS=16
export GOMP_CPU_AFFINITY=0-15
NPROC=`expr $PBS_NCPUS / $OMP_NUM_THREADS`
OMP_PARAM="--report-bindings --map-by ppr:1:node:pe=16 -x OMP_NUM_THREADS -x GOMP_CPU_AFFINITY"
mpirun -np $NPROC $OMP_PARAM ./myprog.exe
```

Since each Raijin Sandy Bridge node has 2 processor sockets each with 8 cores (i.e. a total of 16 cores), we can also launch 1 MPI process per socket, binding 8 cores per MPI process (binding to socket), running 8 OMP threads per MPI process and launching 2 MPI processes per node like the following:

```bash
#!/bin/bash
#PBS -q normal
#PBS -l ncpus=32
#PBS -l walltime=00:20:00
#PBS -l mem=56GB
#PBS -l wd

module load openmpi/1.10.2
export OMP_NUM_THREADS=8
export GOMP_CPU_AFFINITY=0-15
NPROC=`expr $PBS_NCPUS / $OMP_NUM_THREADS`
OMP_PARAM="--report-bindings --map-by ppr:1:socket:pe=8 -x OMP_NUM_THREADS -x GOMP_CPU_AFFINITY"
mpirun -np $NPROC $OMP_PARAM ./myprog.exe
```

Details about MPI process binding to cpu core is available at https://opus.nci.org.au/display/Help/Hybrid+MPI+OpenMP.
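Both hybrid scripts compute NPROC with integer division, which silently drops any remainder when ncpus is not a multiple of OMP_NUM_THREADS. For example, ncpus=32 with 12 threads gives 2 ranks and leaves 8 requested cores idle. The following is a minimal sketch of a check, using a hypothetical helper named hybrid_nproc.

```bash
# Hypothetical helper: MPI ranks for a hybrid job = ncpus / threads per rank.
# Refuses layouts that would leave requested cores unused.
hybrid_nproc() {
    ncpus=$1
    threads=$2
    if [ "$threads" -le 0 ] || [ $((ncpus % threads)) -ne 0 ]; then
        echo "error: ncpus=$ncpus is not a multiple of OMP_NUM_THREADS=$threads" >&2
        return 1
    fi
    echo $((ncpus / threads))
}

# In the job scripts above, instead of the expr line:
# NPROC=$(hybrid_nproc "$PBS_NCPUS" "$OMP_NUM_THREADS") || exit 1
```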

Code Development

Debugging

Intel debugger

idb is the Intel debugger for C, C++ and Fortran. It also works with code built by the GNU C/C++ compilers and can be used in either DBX or GDB mode. It supports the debugging of simple programs, core files and code with multiple threads. The GNU debugger gdb is also available. Read man idb for further information. To use idb, first compile and link your program using the -g switch, e.g.

```bash
cc -g prog.c
```

Start the debugger:

```bash
idbc ./a.out
```

Enter commands such as

```bash
...
(idb) list
(idb) stop at 10
(idb) run
(idb) print variable
...
(idb) quit
```

- By starting idb with the -gui option (i.e. idbc -gui) you get a useful graphical user interface.

Coredump files

By default your jobs will not produce coredump files when they crash. To generate corefiles you need:

```bash
limit coredumpsize unlimited   # tcsh
ulimit -c unlimited            # bash
```

Also, if you are using the Intel Fortran compiler, you will need

```bash
setenv decfort_dump_flag y     # tcsh
export decfort_dump_flag=y     # bash
```
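In a PBS job script, these settings must come before the program is launched. The following is a minimal sketch for a bash or sh job script. It uses a hypothetical helper, enable_core_dumps, which falls back to the hard limit when "unlimited" is not permitted.

```bash
# Hypothetical helper: allow core files for programs started by this shell.
enable_core_dumps() {
    # Try unlimited; if the hard limit forbids it, use the hard limit instead.
    ulimit -c unlimited 2>/dev/null || ulimit -c "$(ulimit -H -c)"
    # Intel Fortran runtime: also write a core file on a severe run-time error.
    decfort_dump_flag=y
    export decfort_dump_flag
}

# Example in a job script:
# enable_core_dumps
# mpirun -np $PBS_NCPUS ./a.out
```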

To use the coredump file, enter

```bash
gdb /path/to/the/executable /path/to/the/coredumpfile
...
(gdb) where
(gdb) bt
(gdb) frame number
(gdb) list
(gdb) info locals
(gdb) print variable
...
(gdb) quit
```

Coredump files can take up a lot of disk space especially from a large parallel job – be careful not to generate them if you are not going to use them.
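When a parallel job leaves several core files, it can be quicker to print a backtrace from each one non-interactively, then delete the files you no longer need. The following sketch uses gdb's -batch and -ex options. backtrace_cores is a hypothetical helper. It assumes the core files are named core or core.<pid> in the current directory; the actual naming depends on the system's kernel.core_pattern setting.

```bash
# Hypothetical helper: print a backtrace from every core file in this directory.
# Usage: backtrace_cores /path/to/the/executable
backtrace_cores() {
    exe=$1
    found=0
    for core in core core.*; do
        [ -f "$core" ] || continue   # skip the literal pattern if nothing matched
        found=1
        echo "== $core"
        gdb -batch -ex bt "$exe" "$core"
    done
    # Non-zero exit status when no core files were found.
    [ "$found" -eq 1 ]
}
```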

Debugging Parallel Programs

Totalview can be used to debug parallel MPI or OpenMP programs. Introductory information and user guides for Totalview are available from this site.

Load the Totalview module:

```bash
module load totalview
```

Compile your code with the -g option. For example, for an MPI program:

```bash
mpif90 -g prog.f
```

Start Totalview. For example, to debug an MPI program using 4 processors, the usual command is

```bash
mpirun --debug -np 4 ./a.out
```

This works because mpirun is a locally written wrapper.

Note that to ensure that Totalview can obtain information on all variables, you should compile with no optimisation. This is the default if -g is used without a specific optimisation level.

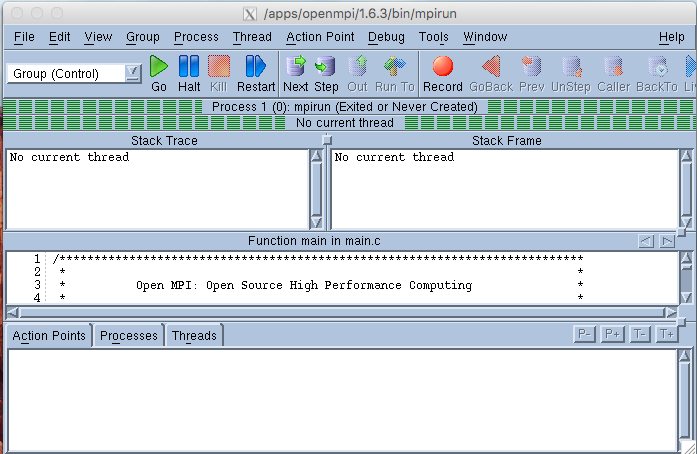

When it first starts an MPI job, Totalview shows the source code for mpirun in a GUI like the one shown above. Click GO and all the processes will start up, and you will be asked whether you want to stop the parallel job. At this point, click YES if you want to insert breakpoints. The source code will be shown and you can click on any lines where you wish to stop.

If your source code is in a different directory from where you fired up Totalview you may need to add the path to Search Path under the File Menu. Right clicking on any subroutine name will “dive” into the source code for that routine and break points can be set there.

The procedure for viewing variables in an MPI job is a little complicated. If your code has stopped at a breakpoint, right click on the variable or array of interest in the stack frame window. If the variable cannot be displayed, choose “Add to expression list” and the variable will appear in a new window. If it is marked “Invalid compilation scope” in this new window, right click again on the variable name in this window and choose “Compilation scope”. Change this to “Floating” and the value of the variable or array should appear. Right clicking on it again and choosing “Dive” will give you the values for arrays. In this window you can choose “Laminate” then “Process” under the View menu to see the values on different processors.

Under the Tools option on the top toolbar of the window displaying the variable values you can choose Visualise to display the data graphically which can be useful for large arrays.

It is also possible to use Totalview for memory debugging, showing graphical representations of outstanding messages in MPI or setting action points based on evaluation of a user defined expression. See the Totalview User Guide for more information.

Alternatively, PADB, a lightweight parallel program inspection and debugging tool, is also available on NCI compute systems. For more information on its usage, please refer to the Parallel Program Debugging User Guide.

For more information on memory debugging see here.

Notes on Benchmarking

Before running MPI jobs on many processors you should run some smaller benchmarking and timing tests to see how well your application scales. There is an art to this and the following points are things you should consider when setting up timing examples. For scaling tests you need a typically sized problem that does not take too long to run. So, for example, it may be possible to time just a few iterations of your application. Test runs should be replicated several times as there will be some variation in timings.

- NUMA page placement:

If the memory of a node is not empty when a job starts on it, there is a chance that pages will be placed badly. (Suspend/resume is an obvious “cause” of this problem but even after exiting, a job can leave a memory footprint (pagecache) meaning “idle” nodes can also cause problems.) A locally written modification to Open MPI 1.4.3 (now the default version of Open MPI) has memory binding set by default. Ask for help if you think you need this turned off. The good news is that the Open MPI developers will support memory binding in future releases.

Fix: Use Open MPI 1.4.3 or later.

- Paging overhead:

Because of suspend/resume, there is a high probability that any reasonably sized parallel job will have to (partially) page out at least some suspended jobs when starting up. In the worst case, this could take on the order of a minute. For a 3 hour production job this is not an issue, but it is significant for a 10 minute test job. There are various ways to avoid this:

- just use the walltime of some later iteration/timestep as the benchmark measure and ignore the first couple of iterations

- run the mpirun command 2 or 3 times in the one PBS job and use the last run for timing. The first runs should be about a minute long.

- run “mpirun memhog size” where size is the real memory required per MPI task (takes an “m” or “g” suffix). Beware of causing excessive paging or getting your job killed by PBS by trying to memhog too much memory.

Fix: Clear memory for the job as above.

- Network interactions and locality:

Generally small and hard to avoid except in special cases. The scheduler does try to impose some degree of locality but that could be strengthened. It should also be possible to add a qsub option to request best locality. But the job would probably queue longer and could still see issues. For example we regularly have InfiniBand links down and, with IB static routing, that means some links are doing double duty.

Fix: New PBS option to request best locality, or live with it.

- IO interactions with other jobs:

This is almost impossible to control. There is usually lots of scope for tuning IO (both at the system level and in the job) so it certainly shouldn’t be ignored. But for benchmarking purposes:

Fix: do as little IO as possible and test the IO part separately.

- Communication startup:

MPI communication is connection based meaning there is a fair amount of negotiation before the first message can be sent. There are ways to control when and how this happens (look for “mpi_preconnect_” in the output from “ompi_info -a”) but you cannot avoid this overhead.

Fix: try to discount or quantify the startup cost as discussed above.
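Several of the fixes above amount to running the same command more than once in one PBS job and trusting only the later timings. The following is a minimal POSIX shell sketch with these assumptions:

- repeat_timed is a hypothetical helper.
- Each run's output goes to run<i>.log.
- date +%s gives only one-second resolution. That is adequate for the minute-long runs suggested above.

```bash
# Hypothetical helper: run a command N times and report each run's wall time.
# Usage (inside a PBS job): repeat_timed 3 mpirun -np $PBS_NCPUS ./a.out
repeat_timed() {
    runs=$1
    shift
    i=1
    while [ "$i" -le "$runs" ]; do
        start=$(date +%s)
        "$@" > "run$i.log" 2>&1
        end=$(date +%s)
        echo "run $i: $((end - start)) s"
        i=$((i + 1))
    done
}
```

Discard the first run or two, which absorb page-out and connection setup, and use the later ones as the benchmark. Repeating the whole job several times also shows the run-to-run variation.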

Profiling

gprof

The gprof profiling tool is available for sequential codes.

The gprof profiler provides information on the most time-consuming subprograms in your code. Running the executable prog.exe stores profiling data in gmon.out, which can then be interpreted by gprof as follows:

```bash
ifort -p -o prog.exe prog.f
./prog.exe
gprof ./prog.exe gmon.out
```

For the GNU compilers, use:

```bash
gfortran -pg -o prog.exe prog.f
./prog.exe                    # writes gmon.out
gprof ./prog.exe gmon.out
```

gprof is not useful for parallel code; use HPCToolkit instead. More information on profiling parallel code is available in the General Performance Analysis User Guide.

Graphical Profiling of MPI Code

Two lightweight MPI profilers, IPM and mpiP, are available for Open MPI parallel codes. Minimal usage instructions are given below. For a more detailed user guide, please refer to the MPI Performance Analysis User Guide.

IPM

| Module | Works with | Notes |
|---|---|---|
| ipm/0.983-nci | openmpi/1.6.5 or earlier | gives a nice graph of the communication pattern |
| ipm/0.983-cache | openmpi/1.6.5 or earlier | gives L1, L2 and L3 cache misses |
| ipm/2.0.2 | only openmpi/1.7.* and 1.8.* | the only version that gives flops |
| ipm/2.0.5 | openmpi/1.10.2 | |

```bash
module load openmpi
module load ipm
mpirun ./prog.exe
```

To view the IPM profile results (usually in the format of username.xxxxxxxxxx.xxxxxx.0):

```bash
ssh -X abc123@raijin.nci.org.au     # -X enables remote display
ipm_view username.xxxxxxxxxx.xxxxxx.0
```

mpiP

Available versions: mpiP/3.2.1 and mpiP/3.4.1.

```bash
mpicc -g -o prog.exe prog.c     # optional
module load openmpi
module load mpiP
mpirun -np $n ./prog.exe
```

To view the mpiP profile results (usually in the format of prog.exe.${n}.xxxxx.x.mpiP):

```bash
ssh -X abc123@raijin.nci.org.au     # -X enables remote display
mpipview prog.exe.${n}.xxxxx.x.mpiP
```

...